Word Embedding For Name Matching

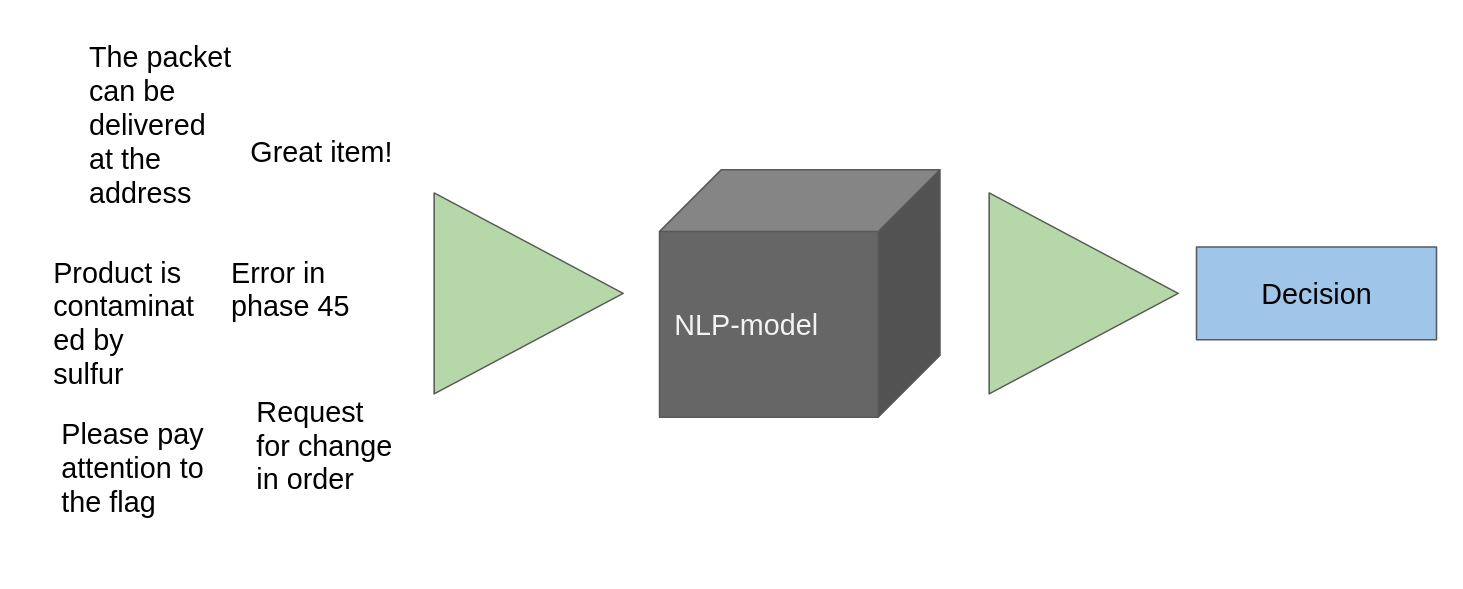

Along with similar words, it can also suggest dissimilar words as well as the most common words. Name matching algorithms: the basics you need to know about fuzzy name matching.

Then you count the number of words a name has in common with another name, and you set a threshold on the number of shared words before the two names are considered similar.
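A minimal sketch of this word-overlap rule, assuming names are compared case-insensitively and split on whitespace only. The function names and the `min_shared` threshold are hypothetical, chosen for illustration:

```python
from typing import Set


def word_set(name: str) -> Set[str]:
    # Lowercase and split on whitespace so "ACME" and "acme" count as the same word
    return set(name.lower().split())


def shared_word_count(name_a: str, name_b: str) -> int:
    # Number of distinct words the two names have in common
    return len(word_set(name_a) & word_set(name_b))


def is_similar(name_a: str, name_b: str, min_shared: int = 2) -> bool:
    # Treat the names as similar once they share at least `min_shared` words
    return shared_word_count(name_a, name_b) >= min_shared


print(shared_word_count("Acme Widget Company", "acme widget co"))  # 2
print(is_similar("Acme Widget Company", "acme widget co"))          # True
print(is_similar("Acme Widget", "Beta Widget"))                     # False
```

This rule is purely lexical: "Co" and "Company" count as different words, which is one gap that embeddings are meant to close.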

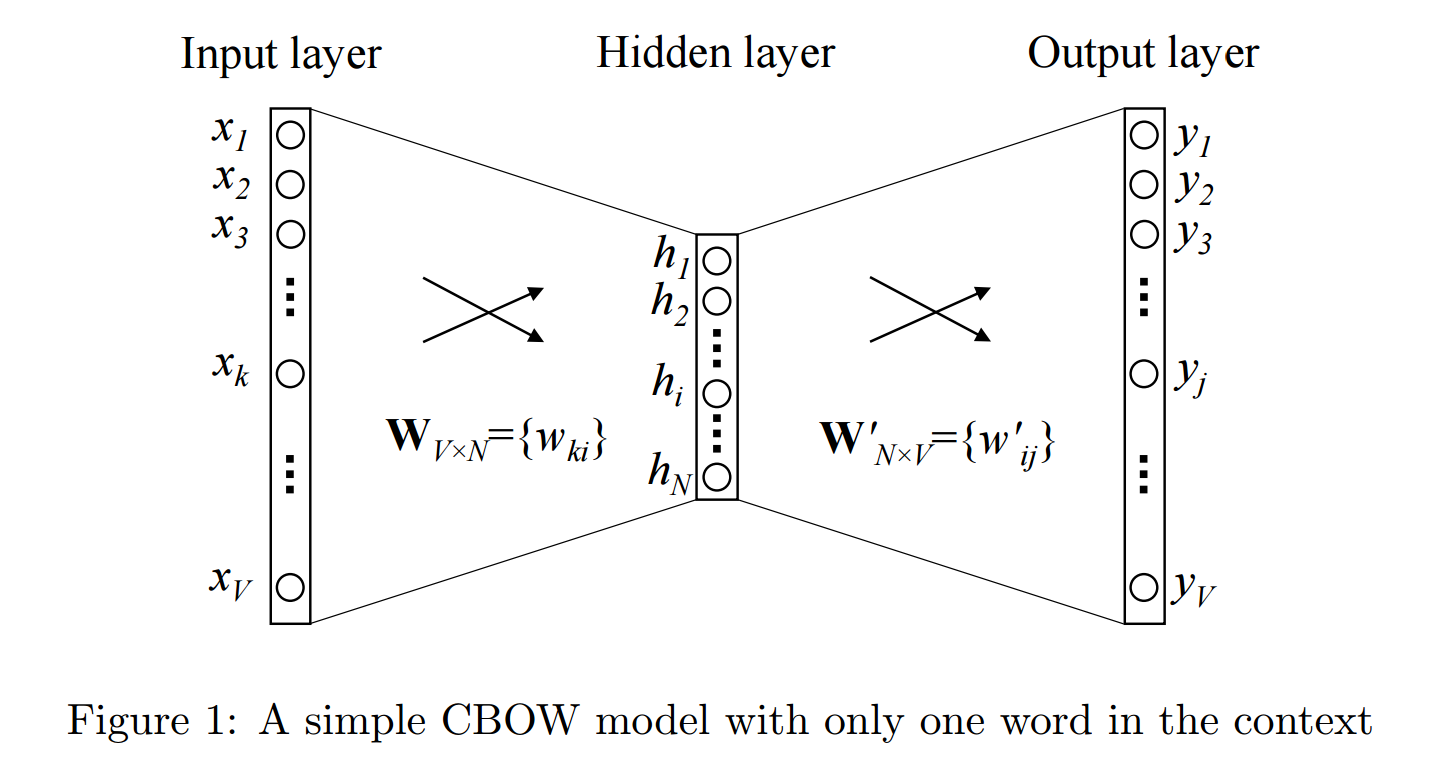

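Word embedding for name matching. Word embedding is a language modeling technique used for mapping words to vectors of real numbers. The width of the word embeddings is the length of each of these vectors. Because related words receive nearby vectors, the embeddings can be used to create a group of related words.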
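To make the word-to-vector mapping concrete, here is a toy embedding table, assuming a fixed vocabulary, an extra row for out-of-vocabulary words, and small random initial values (a trained model would learn these values instead). The class and parameter names are hypothetical:

```python
import random
from typing import List


class EmbeddingTable:
    """Toy word -> vector table with shape (vocab_size + 1, embedding_size)."""

    def __init__(self, vocab: List[str], embedding_size: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Map each vocabulary word to a row index
        self.index = {word: i for i, word in enumerate(vocab)}
        # Reserve one extra row for out-of-vocabulary (OOV) words
        self.oov_row = len(vocab)
        # Small random initial values; training would adjust these
        self.rows = [
            [rng.uniform(-0.02, 0.02) for _ in range(embedding_size)]
            for _ in range(len(vocab) + 1)
        ]

    def vector(self, word: str) -> List[float]:
        # Look up the row for the word, falling back to the OOV row
        return self.rows[self.index.get(word.lower(), self.oov_row)]


table = EmbeddingTable(["acme", "corp", "bakery"], embedding_size=4)
print(len(table.rows), len(table.vector("Acme")))  # 4 4
```

Here `embedding_size` is the width of the word embeddings: every word, known or not, maps to a vector of that length.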

Compared to using regular expressions on raw text, spaCy's rule-based matcher engines and components not only let you find the words and phrases you're looking for, they also give you access to the tokens within the document and their relationships. In an embedding layer, the lookup table itself is identified by a name (BERT's `embedding_lookup` calls this argument `word_embedding_name`, the name of the embedding table).

Word embedding is used to suggest words similar to the word passed to the prediction model. When the `ratio` threshold is set to 70, over 90% of the pairs exceed a match score of 70. To combine word embeddings into a single vector for a name or sentence, every word embedding can be weighted by $\frac{a}{a + p(w)}$, where $a$ is a parameter typically set to 0.001 and $p(w)$ is the estimated frequency of the word in a reference corpus.
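A minimal sketch of this frequency-based weighting, assuming word vectors and corpus frequencies are already available as plain dictionaries. It computes $\frac{1}{|s|}\sum_{w \in s} \frac{a}{a + p(w)} v_w$ over the known words; the common-component removal step that often accompanies this weighting is deliberately omitted, and the toy vectors and frequencies are made up for illustration:

```python
from typing import Dict, List

Vector = List[float]


def sif_weight(word: str, word_freq: Dict[str, float], a: float = 1e-3) -> float:
    # a / (a + p(w)): frequent words get small weights; unseen words have p(w) = 0, so weight 1
    return a / (a + word_freq.get(word, 0.0))


def sif_average(tokens: List[str], vectors: Dict[str, Vector],
                word_freq: Dict[str, float], a: float = 1e-3) -> Vector:
    # Weighted average of the known tokens' vectors (common-component removal omitted)
    known = [t for t in tokens if t in vectors]
    if not known:
        return []
    dim = len(vectors[known[0]])
    total = [0.0] * dim
    for tok in known:
        w = sif_weight(tok, word_freq, a)
        for i, x in enumerate(vectors[tok]):
            total[i] += w * x
    return [x / len(known) for x in total]


vectors = {"acme": [1.0, 0.0], "inc": [0.0, 1.0]}
word_freq = {"acme": 0.0, "inc": 0.009}  # "inc" is very common in company names
print(sif_average(["acme", "inc"], vectors, word_freq))  # roughly [0.5, 0.05]
```

The effect for name matching is that generic tokens such as "inc" or "ltd" contribute far less to the combined vector than distinctive tokens such as "acme".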

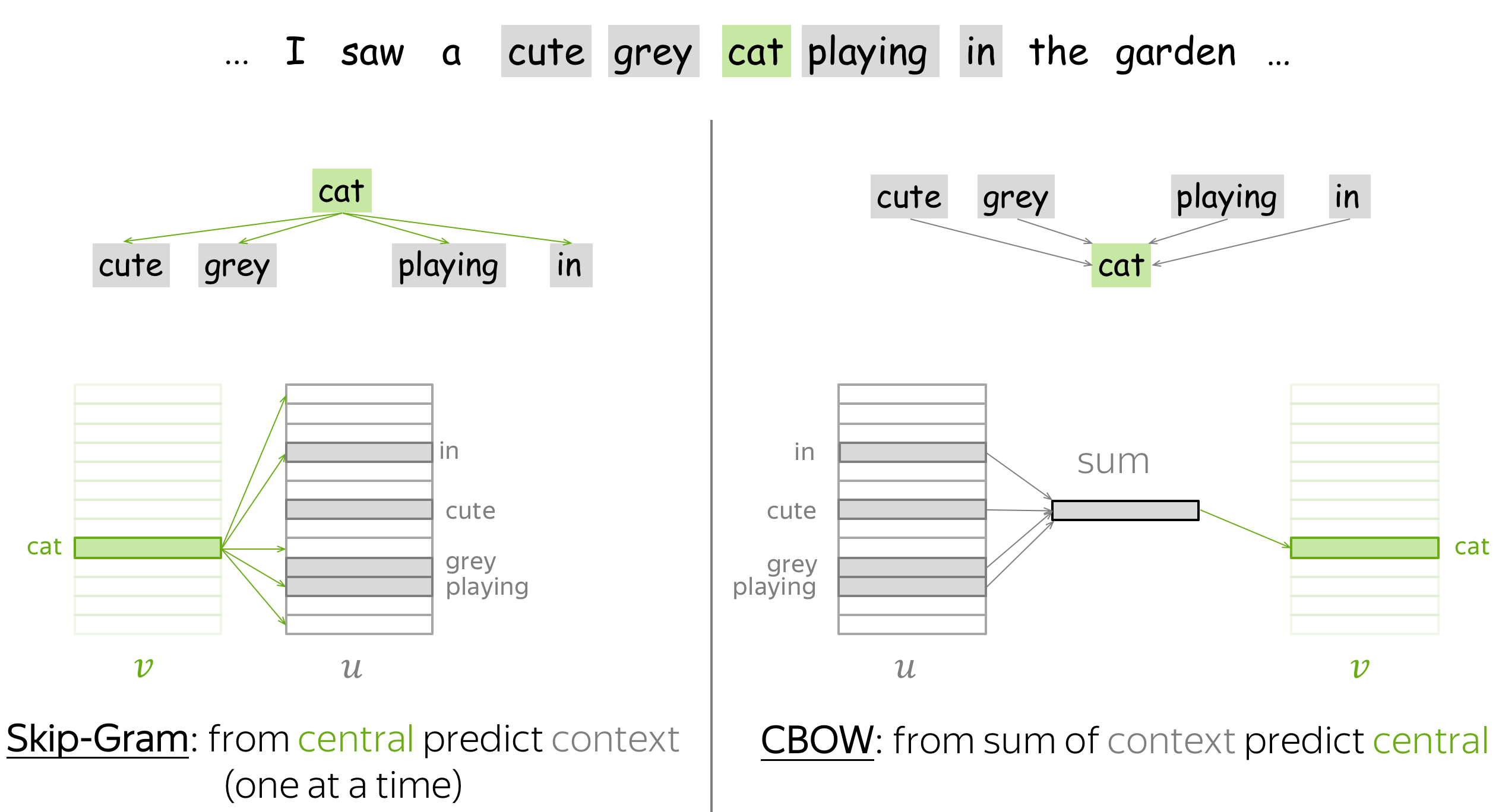

Word embedding and Word2Vec. Some popular word embedding techniques include Word2Vec, GloVe, ELMo, and FastText.

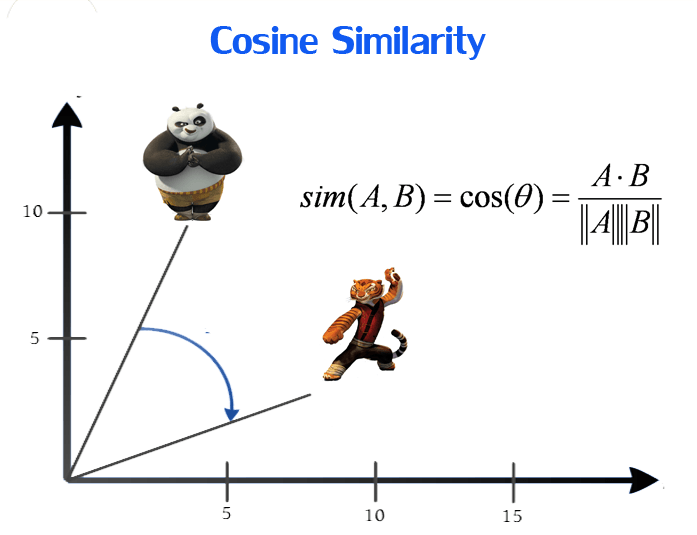

Word embedding is used for semantic grouping, which places things with similar characteristics close together and dissimilar things far apart.

It represents words or phrases as vectors in a space with several dimensions. In BERT's `embedding_lookup`, for example, if `use_one_hot_embeddings` is True the lookup uses a one-hot method for word embeddings; if it is False, it uses `tf.gather()`.
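The two lookup strategies return the same rows. This pure-Python analogy (not TensorFlow code; the function names are hypothetical) shows why: multiplying a one-hot row vector by the embedding table selects exactly one row, which is what direct indexing, the role `tf.gather()` plays, does in one step:

```python
from typing import List

Matrix = List[List[float]]


def lookup_gather(table: Matrix, ids: List[int]) -> Matrix:
    # Direct row indexing, analogous to gathering rows of the table by id
    return [list(table[i]) for i in ids]


def lookup_one_hot(table: Matrix, ids: List[int]) -> Matrix:
    vocab_size, width = len(table), len(table[0])
    out = []
    for i in ids:
        # One-hot vector of length vocab_size with a 1 at position i
        one_hot = [1.0 if j == i else 0.0 for j in range(vocab_size)]
        # (1 x vocab_size) @ (vocab_size x width) -> (1 x width)
        row = [sum(one_hot[j] * table[j][k] for j in range(vocab_size)) for k in range(width)]
        out.append(row)
    return out


table = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
ids = [2, 0, 2]
print(lookup_gather(table, ids))  # [[0.5, 0.6], [0.1, 0.2], [0.5, 0.6]]
print(lookup_gather(table, ids) == lookup_one_hot(table, ids))  # True
```

The one-hot form does vocab_size times more arithmetic per lookup, but it expresses the lookup as a matrix multiplication, which some hardware handles more efficiently than scattered indexing.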

`emb = trainWordEmbedding(documents)` trains a word embedding using `documents` by creating a temporary file with `writeTextDocument` and then training an embedding using the temporary file. For names, what you can do is separate the words by whitespace, commas, and similar delimiters. When embeddings are stored on disk, you can also get all the embedding filenames that match a given pattern.
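A minimal sketch of splitting names into words before looking up their embeddings, assuming a hypothetical `tokenize_name` helper and a delimiter set (whitespace, commas, periods, semicolons, ampersands, slashes, hyphens) chosen only for illustration:

```python
import re
from typing import List


def tokenize_name(name: str) -> List[str]:
    # Lowercase, then split on runs of whitespace, commas and a few other punctuation marks;
    # empty strings left by leading/trailing delimiters are dropped
    return [tok for tok in re.split(r"[\s,.;&/-]+", name.lower()) if tok]


print(tokenize_name("Acme, Inc."))            # ['acme', 'inc']
print(tokenize_name("Smith-Jones  Ltd/LLC"))  # ['smith', 'jones', 'ltd', 'llc']
```

Which characters count as delimiters is a design choice: splitting on "&" separates "AT&T" into "at" and "t", so a real system may want exceptions for such names.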

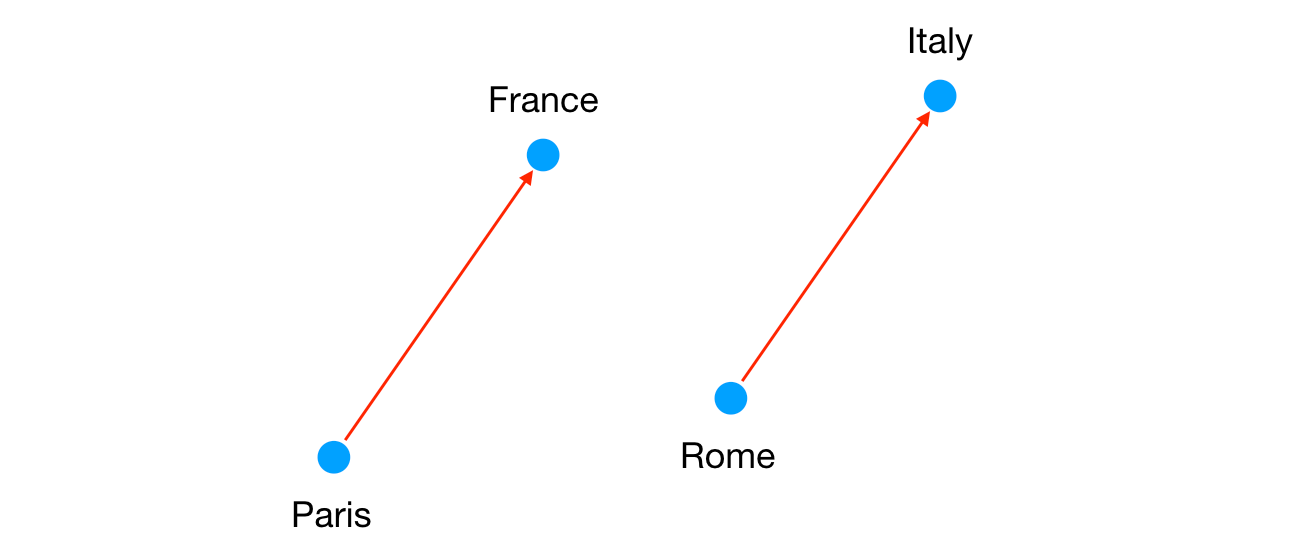

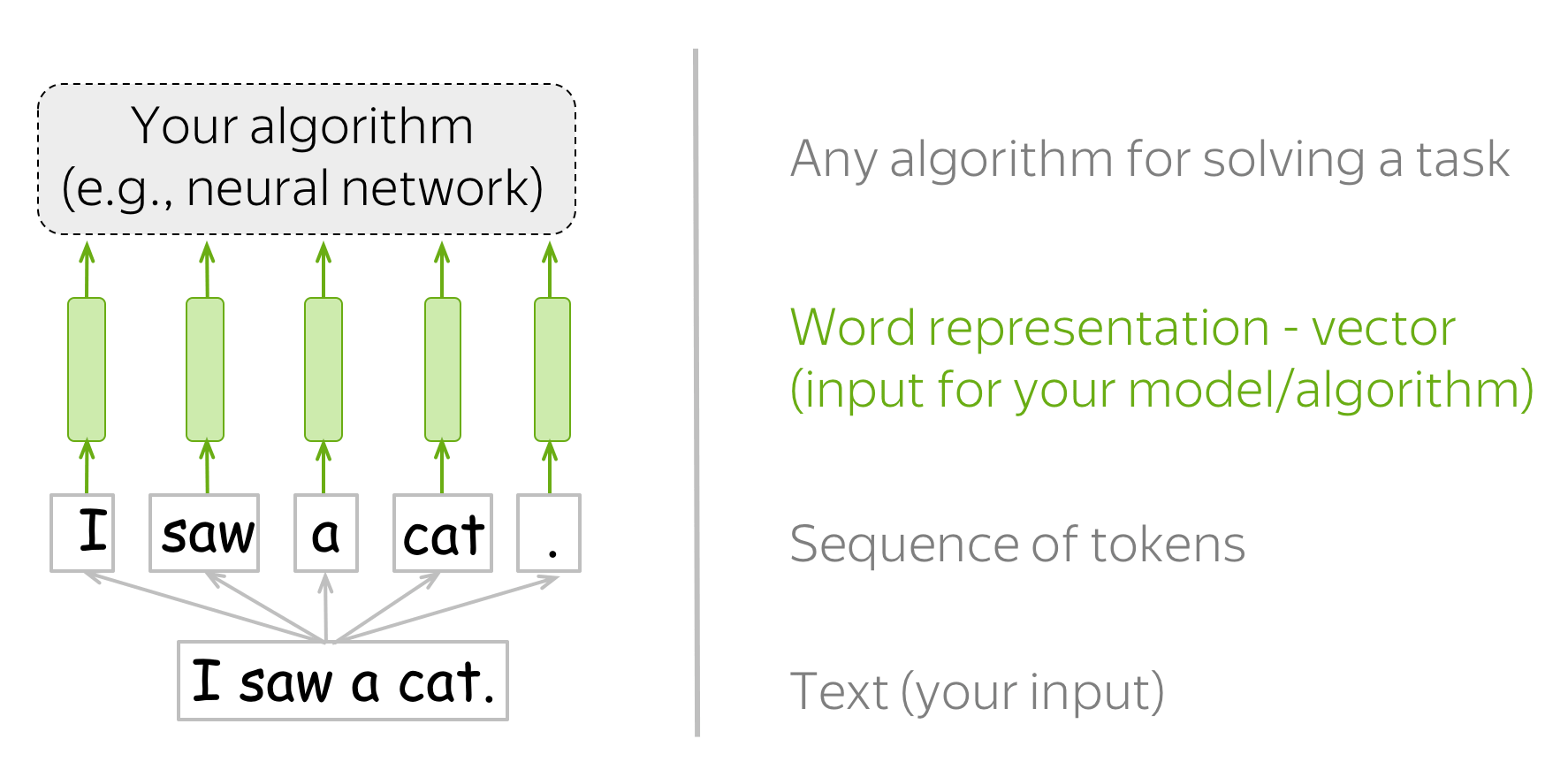

The other way is to do the same thing, but working with the words themselves. In natural language processing, word embedding is a term used for the representation of words for text analysis, typically in the form of a real-valued vector that encodes the meaning of the word such that words that are closer in the vector space are expected to be similar in meaning. When the data is stored in a TFRecord file, you iterate through the `tf.Example` instances in that file.

Embedding-based matching is commonly used for organization names. Word embeddings can be generated using various methods, such as neural networks, co-occurrence matrices, and probabilistic models.

An embedding lookup returns a float Tensor of shape `[batch_size, seq_length, embedding_size]`. Word embedding aims to create better vector representations of words, both in terms of efficiency and in preserving meaning. The underlying concept is to use information from the words adjacent to each word.
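The "adjacent words" idea can be sketched as collecting (target, context) pairs within a fixed window. Such pairs are the training examples for skip-gram Word2Vec, and counting them gives a sparse co-occurrence matrix for count-based methods. The function names and the window size are illustrative assumptions:

```python
from collections import Counter
from typing import List, Tuple


def context_pairs(tokens: List[str], window: int = 2) -> List[Tuple[str, str]]:
    # (target, context) pairs for every word within `window` positions of the target
    pairs = []
    for i, target in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs


def cooccurrence_counts(sentences: List[List[str]], window: int = 2) -> Counter:
    # Sparse co-occurrence matrix: counts[(w, c)] = number of times c appeared near w
    counts: Counter = Counter()
    for sent in sentences:
        counts.update(context_pairs(sent, window))
    return counts


print(context_pairs(["acme", "widget", "corp"], window=1))
# [('acme', 'widget'), ('widget', 'acme'), ('widget', 'corp'), ('corp', 'widget')]
```

A larger window captures broader, more topical similarity, while a small window tends to favor words that play the same syntactic role.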

Word embedding turns each word into a numerical vector based on its semantic meaning and calculates the similarity of two words in a multidimensional space. For each embedding file, you load the vectors and compute similar words.
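Similarity in the vector space is most often measured with cosine similarity. A minimal sketch, assuming the vectors are already loaded into a dictionary; the two-dimensional toy vectors below are invented, since real embeddings typically have hundreds of dimensions:

```python
import math
from typing import Dict, List, Tuple


def cosine(u: List[float], v: List[float]) -> float:
    # Cosine of the angle between u and v; 0.0 if either vector is all zeros
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def most_similar(word: str, vectors: Dict[str, List[float]], topn: int = 3) -> List[Tuple[str, float]]:
    # Rank every other word by cosine similarity to `word`, highest first
    query = vectors[word]
    scored = [(other, cosine(query, vec)) for other, vec in vectors.items() if other != word]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:topn]


vecs = {
    "corp": [1.0, 0.1],
    "corporation": [0.9, 0.2],
    "inc": [0.8, 0.3],
    "bakery": [0.0, 1.0],
}
print([w for w, _ in most_similar("corp", vecs)])  # ['corporation', 'inc', 'bakery']
```

Reading the list from the bottom gives the most dissimilar words, which is how the same computation can also suggest dissimilar words.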

The Jupyter notebook can be found on GitHub. `emb = trainWordEmbedding(___,Name,Value)` specifies additional options using one or more name-value pair arguments.

Word Embeddings for Fuzzy Matching of Organization Names: Rosette's name matching is enhanced by word embeddings to match based on semantics as well as phonetics. Tracking mentions of particular organizations across news articles, social media, and internal communications is integral to the workflow of dozens of use cases across industries.
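Rosette's actual method is not described here, so the following is only a generic sketch of the idea of matching on both surface form and meaning. It blends a character-level score from Python's `difflib.SequenceMatcher` with the cosine similarity of averaged word vectors. The weighting `alpha`, the function names, and the toy vectors are all hypothetical:

```python
import math
from difflib import SequenceMatcher
from typing import Dict, List, Optional

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    nu, nv = math.sqrt(sum(a * a for a in u)), math.sqrt(sum(b * b for b in v))
    return 0.0 if nu == 0.0 or nv == 0.0 else dot / (nu * nv)


def mean_vector(tokens: List[str], vectors: Dict[str, Vector]) -> Optional[Vector]:
    # Average the vectors of known tokens; None if no token has a vector
    known = [vectors[t] for t in tokens if t in vectors]
    if not known:
        return None
    return [sum(v[i] for v in known) / len(known) for i in range(len(known[0]))]


def hybrid_score(a: str, b: str, vectors: Dict[str, Vector], alpha: float = 0.5) -> float:
    a_norm, b_norm = a.lower(), b.lower()
    # Character-level similarity in [0, 1]
    char_sim = SequenceMatcher(None, a_norm, b_norm).ratio()
    va, vb = mean_vector(a_norm.split(), vectors), mean_vector(b_norm.split(), vectors)
    # Semantic similarity from embeddings, 0.0 when either name has no known words
    sem_sim = cosine(va, vb) if va is not None and vb is not None else 0.0
    return alpha * char_sim + (1 - alpha) * sem_sim


vecs = {"international": [1.0, 0.0, 0.0], "intl": [0.95, 0.05, 0.0],
        "business": [0.0, 1.0, 0.0], "machines": [0.0, 0.0, 1.0]}
print(round(100 * hybrid_score("International Business Machines", "Intl Business Machines", vecs)))
```

Multiplying the score by 100 puts it on the same 0 to 100 scale as the match-score threshold of 70 mentioned earlier; the cosine term can in principle be negative, so a production version might clip it to [0, 1].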

For instance, a word embedding layer may involve creating a 10000 x 300 matrix, whereby we look up a 300-length vector representation for each of the 10000 words in our vocabulary. Word embeddings can be obtained using a set of language modeling and feature learning techniques where words or phrases are mapped to vectors of real numbers.
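As a quick worked check of what such a layer costs, assuming 32-bit (4-byte) floats, which the text does not specify:

$$10000 \times 300 = 3{,}000{,}000 \ \text{parameters}, \qquad 3{,}000{,}000 \times 4 \ \text{bytes} = 12{,}000{,}000 \ \text{bytes} \approx 12 \ \text{MB}$$

Looking up the word with index $i$ simply returns row $i$ of this matrix, a vector of 300 numbers.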
